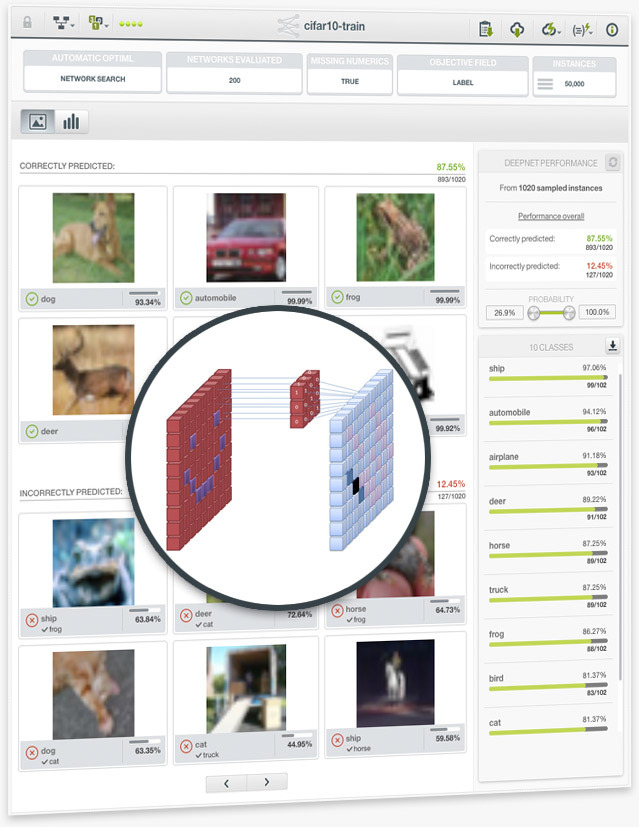

BigML introduces Convolutional Neural Networks (CNNs), the most popular machine learning technique for image classification.

When a dataset that contains images is used to train a Deepnet, BigML’s resource for deep neural networks, the resulting Deepnet is a CNN. The BigML platform abstracts away hardware complexity, so users do not need to worry about infrastructure setup such as GPU installation. Moreover, as with any other BigML model, users can employ 1-click CNN and automatic parameter optimization to accommodate their use cases.

Please visit the Image Processing page to learn more.

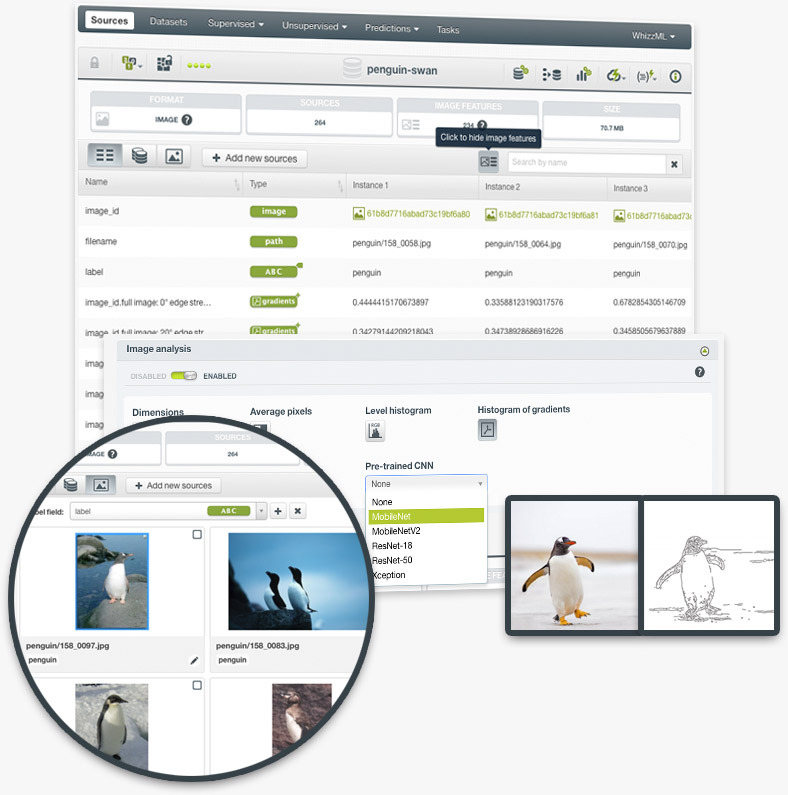

The inclusion of configurable image features makes machine learning with images beautifully simple for everyone. Users can use image features to train all sorts of models, both supervised and unsupervised, which broadens the range of business problems they can address and enhances their Machine Learning workflows.

Image features are sets of numeric fields extracted from images. They can capture parts or patterns of images, such as edges, colors, and textures. The image features extracted by pre-trained CNNs capture more complex patterns. Now, BigML allows users to configure different image features at the source level.
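To make "sets of numeric fields extracted from images" concrete, here is a minimal sketch in plain Python. It is an illustration only and not BigML's feature extractors: the function names, the four-bin histogram, and the gradient-based edge measure are all assumptions chosen for brevity. It turns a tiny grayscale image into a fixed-length row of named numbers, which is the shape of data any tabular model can train on.

```python
# Illustrative only: these are NOT BigML's feature extractors.
# A grayscale image is modelled as a list of rows of 0-255 intensities.

def intensity_histogram(image, bins=4):
    """Fraction of pixels falling in each of `bins` equal-width intensity ranges."""
    counts = [0] * bins
    total = 0
    for row in image:
        for pixel in row:
            index = min(pixel * bins // 256, bins - 1)
            counts[index] += 1
            total += 1
    return [count / total for count in counts]


def mean_horizontal_gradient(image):
    """Average absolute difference between horizontally adjacent pixels (a crude edge measure)."""
    diffs = [abs(row[i + 1] - row[i]) for row in image for i in range(len(row) - 1)]
    return sum(diffs) / len(diffs)


def extract_features(image):
    """Flatten the image into a fixed-length row of named numeric fields."""
    features = {f"hist_bin_{i}": v for i, v in enumerate(intensity_histogram(image))}
    features["edge_strength"] = mean_horizontal_gradient(image)
    return features


# A 2x4 image with a sharp vertical edge in the middle.
image = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
]
features = extract_features(image)
print(features)
```

Features extracted by pre-trained CNNs play the same role as these hand-written ones, but the numbers come from the activations of a trained network rather than from fixed formulas.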

Please visit the Image Processing release page to learn more.

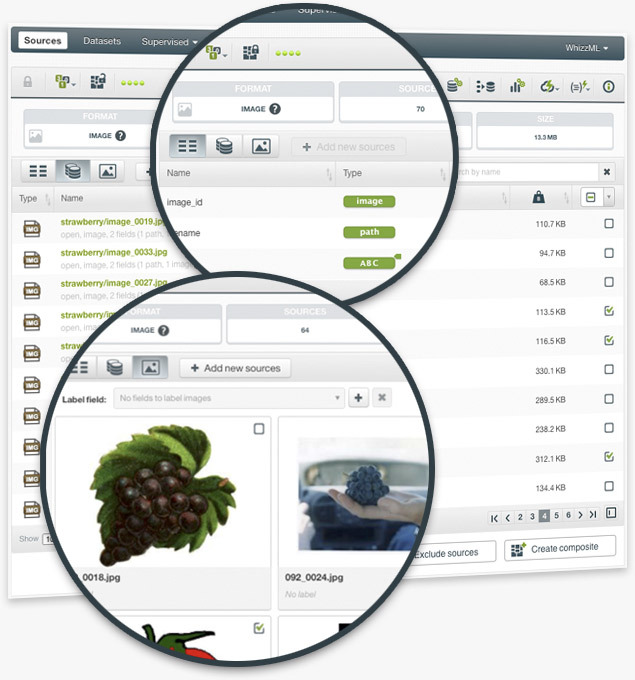

BigML introduces composite sources, a new type of source that augments its Machine Learning capabilities. Image composite sources are collections of images, and play a central role in BigML Image Processing. As such, users can preview, add or remove images in composite sources, as well as extract image features and add labels.

A composite source is a collection of other sources, called components. The power and flexibility of a composite source lie in its ability to allow many types of component sources, including other composite sources. Furthermore, users can manipulate composite sources by performing operations on their components, such as addition and exclusion.
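The recursive structure described above can be sketched as a small data model. This is a hypothetical illustration, not BigML's implementation or API: the class names `Source` and `CompositeSource` and the methods `add`, `exclude`, and `leaves` are invented here to show how a collection whose components may themselves be collections behaves under addition and exclusion.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class Source:
    """A single (non-composite) source, e.g. one image file. Hypothetical class."""
    name: str


@dataclass
class CompositeSource:
    """A collection of components, each a Source or another CompositeSource."""
    name: str
    components: List[Union[Source, "CompositeSource"]] = field(default_factory=list)

    def add(self, component):
        """Addition: append a component to this composite."""
        self.components.append(component)

    def exclude(self, name):
        """Exclusion: drop every direct component with the given name."""
        self.components = [c for c in self.components if c.name != name]

    def leaves(self):
        """All non-composite sources reachable from this composite, depth first."""
        found = []
        for component in self.components:
            if isinstance(component, CompositeSource):
                found.extend(component.leaves())
            else:
                found.append(component)
        return found


cats = CompositeSource("cats", [Source("cat1.png"), Source("cat2.png")])
dogs = CompositeSource("dogs", [Source("dog1.png")])
pets = CompositeSource("pets", [cats, dogs])

pets.add(Source("hamster1.png"))  # add a plain source next to the nested composites
pets.exclude("dogs")              # remove a whole nested composite in one step
leaf_names = [s.name for s in pets.leaves()]
print(leaf_names)
```

Because a component can itself be a composite, excluding one component can remove an entire group of images at once, which is the flexibility the paragraph above refers to.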

You can find more details about composite sources here.

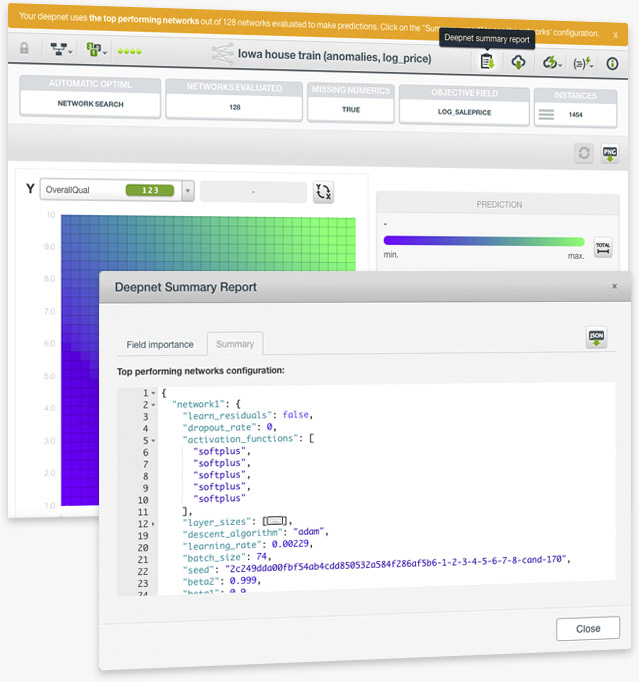

When you use the Automatic Network Search option to find the optimal parametrization of your deepnets, the final deepnet is usually composed of multiple networks with different configurations (see section 4.4.2, Automatic Parameter Optimization, of the deepnet documentation). Until now, these configuration parameters were hidden from the user. To address requests from our most technical customers and provide a higher level of interpretability, BigML now displays the configuration of each of the networks composing a deepnet created with this automatic option.

Please read section 4.5.2.2, Summary, of the deepnet documentation to learn more.

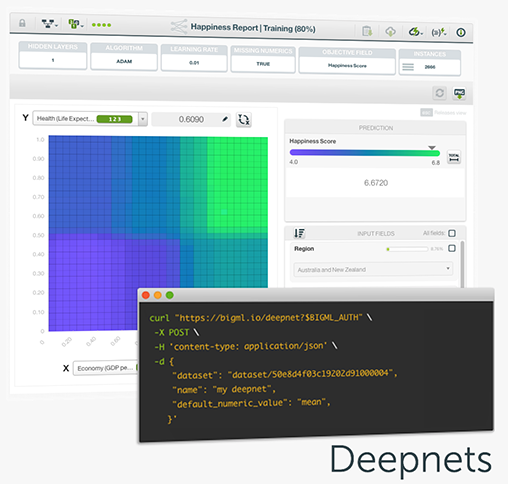

BigML is proud to announce Deepnets, an optimized version of Deep Neural Networks, the machine-learned models loosely inspired by the neural circuitry of the human brain. Deepnets are state-of-the-art in many important supervised learning applications. To avoid the difficult and time-consuming work of hand-tuning the algorithm, BigML’s unique implementation of Deep Neural Networks offers first-class support for automatic network search and parameter optimization. BigML makes it easier for you by searching over possible networks for your dataset and returning the best network found to solve your problem. Thus, non-experts can train deep learning models with results matching those of top-level data scientists.