Automate the entire Machine Learning lifecycle

Focus on solving your business problems instead of building and maintaining cumbersome open-source solutions and the underlying infrastructure. BigML Ops embodies an end-to-end Machine Learning lifecycle management approach, extending the BigML platform with automatic model monitoring and retraining capabilities.

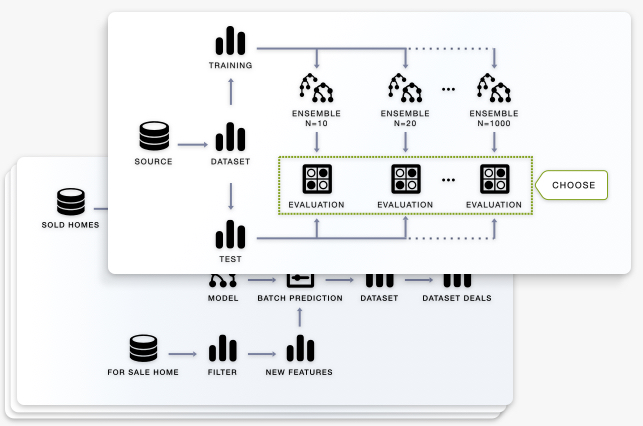

Automatic Model Building

Models built and managed on the BigML platform meet the critical requirements for enterprise-grade machine learning out of the box: interpretability, explainability, and reproducibility. Unlike stovepipe systems, models trained and operationalized on the same platform afford superior continuity in tracing provenance and troubleshooting downstream production issues. Even the most sophisticated pipelines can be implemented as BigML workflows and executed with one click or one API call.

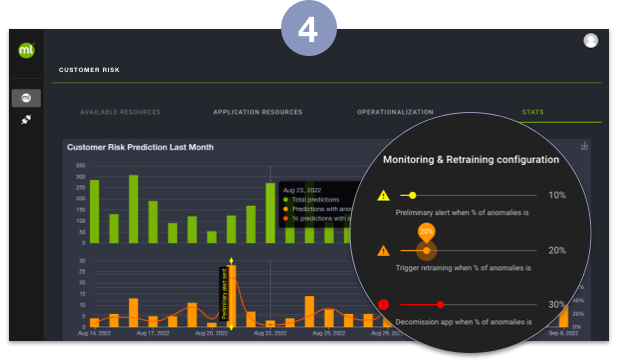

Automatic Model Monitoring

Closely monitor each model's performance both at the physical level (prediction speed, CPU/GPU or memory consumption) and at the logical level. Each model is automatically paired with an anomaly detector that determines the competence of the paired model to help you proactively manage any business or compliance risks tied to predictions.
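The pairing of a model with an anomaly detector can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `ZScoreDetector` class, the `guarded_predict` helper, and the threshold of 3.0 are hypothetical names and values, and the z-score technique is a simple stand-in rather than the detector BigML Ops actually uses.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Callable, Sequence


@dataclass
class GuardedPrediction:
    value: float          # what the model predicted
    anomaly_score: float  # how unusual the input looks
    trusted: bool         # False when the input is outside familiar territory


class ZScoreDetector:
    """Toy anomaly detector (hypothetical): largest absolute z-score per row."""

    def __init__(self, training_rows: Sequence[Sequence[float]]):
        columns = list(zip(*training_rows))
        self.means = [mean(col) for col in columns]
        # Fall back to 1.0 when a column has no spread, to avoid dividing by zero.
        self.stdevs = [pstdev(col) or 1.0 for col in columns]

    def score(self, row: Sequence[float]) -> float:
        return max(
            abs(x - m) / s for x, m, s in zip(row, self.means, self.stdevs)
        )


def guarded_predict(
    model: Callable[[Sequence[float]], float],
    detector: ZScoreDetector,
    row: Sequence[float],
    max_score: float = 3.0,  # assumed cut-off, tune per application
) -> GuardedPrediction:
    """Return the prediction together with a flag for suspicious inputs."""
    score = detector.score(row)
    return GuardedPrediction(model(row), score, score <= max_score)
```

A prediction whose `trusted` flag is `False` came from an input unlike the training data, so it could be routed to a human reviewer or logged for compliance purposes.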

Automatic Model Retraining

Immediately find out when the performance of your models dips below predetermined thresholds and trigger a rerun of the learning workflow. Save time, limit errors, preserve operational agility, and avoid outages whenever significant shifts in data distribution are detected or model performance decays past acceptable levels.
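As a rough sketch of threshold-based retraining, the class below tracks accuracy over a sliding window of recent predictions and invokes a callback when it falls below a limit. The `RetrainingTrigger` name, the window size, and the `retrain` callback are hypothetical; they illustrate the idea and are not part of the BigML Ops API.

```python
from collections import deque
from typing import Any, Callable, Deque


class RetrainingTrigger:
    """Calls `retrain` when windowed accuracy drops below `min_accuracy`."""

    def __init__(
        self,
        retrain: Callable[[], None],
        min_accuracy: float = 0.9,  # assumed threshold
        window: int = 100,          # assumed number of recent predictions
    ):
        self.retrain = retrain
        self.min_accuracy = min_accuracy
        self.outcomes: Deque[bool] = deque(maxlen=window)

    def record(self, predicted: Any, actual: Any) -> bool:
        """Record one labeled prediction; return True if retraining fired."""
        self.outcomes.append(predicted == actual)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough evidence yet
        accuracy = sum(self.outcomes) / len(self.outcomes)
        if accuracy < self.min_accuracy:
            self.retrain()
            self.outcomes.clear()  # start fresh for the retrained model
            return True
        return False
```

Waiting for a full window before evaluating avoids retraining on a handful of early mistakes; a production setup would likely also watch for shifts in the input distribution, which this sketch omits.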

How BigML Ops works

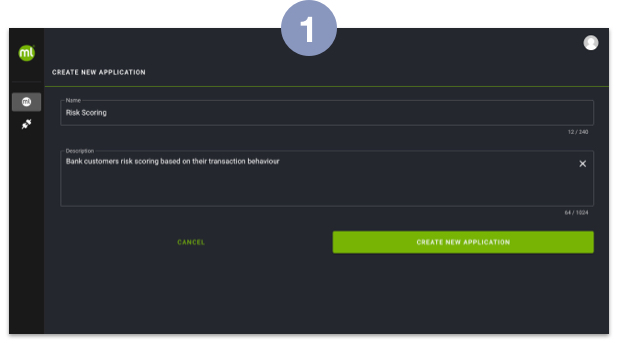

Define a Machine Learning application

BigML Ops provides an intuitive user experience that helps DevOps and Machine Learning engineers to easily operate many applications simultaneously.

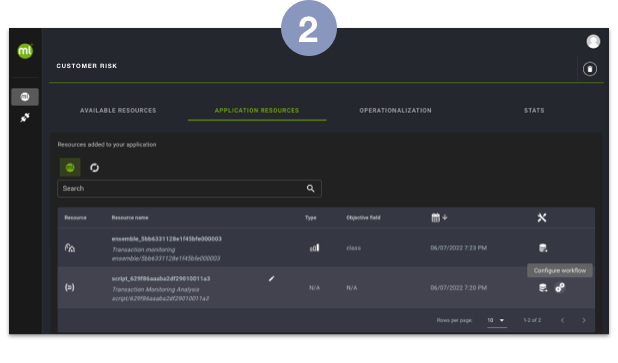

Add all the required workflows and resources

Easily add one or more workflows along with all the related resources (e.g., supervised and unsupervised models), and define input/output data schemas and default values.
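To make the idea of an input schema with default values concrete, here is a minimal sketch. The `FieldSpec` and `apply_schema` names are hypothetical and do not reflect how BigML Ops represents schemas internally; the sketch only shows defaults being filled in and types being checked before a record reaches a workflow.

```python
from dataclasses import dataclass
from typing import Any, Dict, List

_MISSING = object()  # sentinel meaning "no default, field is required"


@dataclass(frozen=True)
class FieldSpec:
    name: str
    kind: type
    default: Any = _MISSING


def apply_schema(schema: List[FieldSpec], record: Dict[str, Any]) -> Dict[str, Any]:
    """Fill in defaults and validate types for one input record."""
    result: Dict[str, Any] = {}
    for spec in schema:
        if spec.name in record:
            value = record[spec.name]
        elif spec.default is not _MISSING:
            value = spec.default
        else:
            raise ValueError(f"missing required field: {spec.name}")
        if not isinstance(value, spec.kind):
            raise TypeError(
                f"field {spec.name!r} expected {spec.kind.__name__}, "
                f"got {type(value).__name__}"
            )
        result[spec.name] = value
    return result
```

For example, with a schema of `FieldSpec("age", int)` and `FieldSpec("plan", str, "basic")`, a record containing only `age` comes back with `plan` set to `"basic"`, while a record without `age` is rejected.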

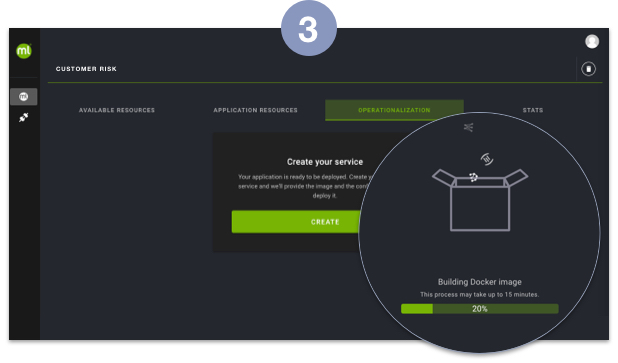

Automatically containerize your application

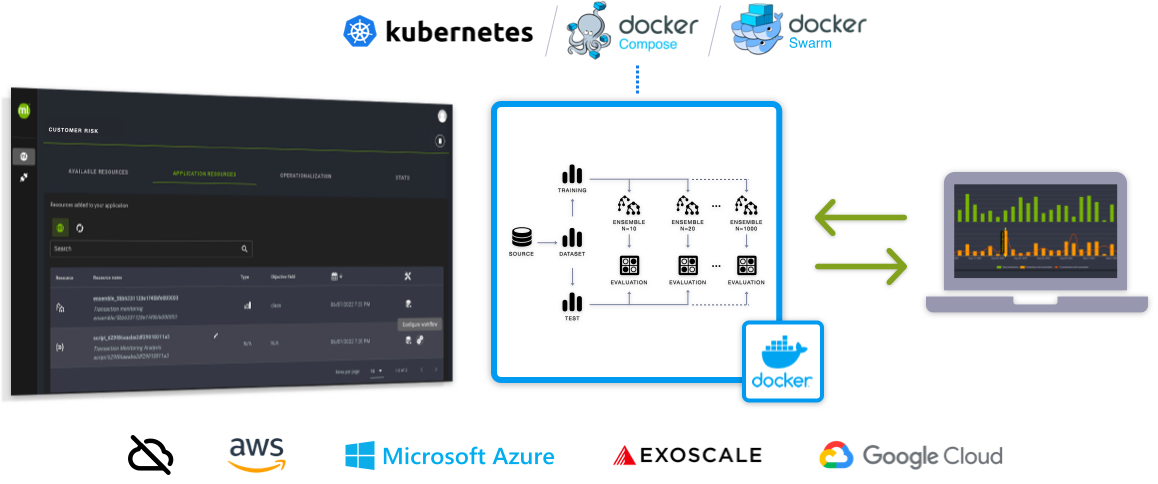

BigML Ops helps you create a containerized package for your application with a single click. Because the container includes all the software components needed to deliver millions of predictions in production, you can easily deploy and scale it within any target environment that supports Kubernetes.

Automatically deploy and monitor your application

All workflow activity is constantly monitored and vital signs are displayed in the BigML Ops dashboard for visibility and proactive lifecycle management.

Operationalize Advanced Machine Learning Workflows, Not Only Single Models

Most companies starting their Machine Learning journeys struggle to bring predictive models built on top of a collection of open-source tools and libraries to production. Those that manage to do so soon realize that this brittle, glue-code-driven ML Ops approach fails to scale to many more models and keeps accruing technical debt over time.

In contrast, BigML Ops focuses on systematically operationalizing entire workflows with built-in reproducibility and traceability.

Automatically Monitor the Health and Performance of Your Applications

BigML Ops constantly monitors all your production Machine Learning workflows and collects vital signs data that are easily accessible from the BigML Ops dashboard. Model usage patterns, compute resource utilization, uptime, prediction results and accuracy statistics are reported in real time for your DevOps and Machine Learning engineering teams to proactively manage the production performance of thousands of models delivering up to millions of mission-critical predictions.

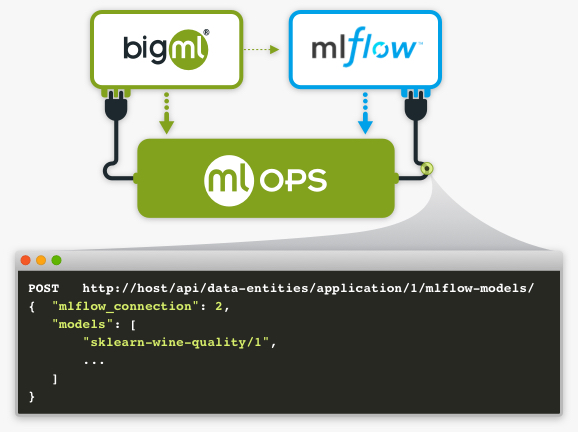

MLflow Support and Interoperability

Models generated with MLflow can be imported into BigML Ops and deployed as part of Dockerized containers to ease the transition to a more robust ML platform. Imported models benefit from the BigML Ops best-practice user experience for administering, monitoring, alerting, and reporting on sophisticated ML workflows in a scalable manner.

Containerized Design Operable in Isolation in any Cloud

BigML Ops composes BigML resources into a deployable Docker container that offers an HTTPS/REST interface for executing your Machine Learning workflows. BigML Ops can be operated independently as a cloud-native application in any cloud, because it does not require connectivity to a public or private deployment of BigML.